Voice Assistants

Most commercially available voice assistants use cloud-based speech recognition to convert audio into actions or queries. To function, devices that provide access to voice assistance services must generally upload a recording of the user’s voice to a remote server for processing. The server can retain the recording indefinitely, which adds to the privacy implications posed by this technology.

Operation

Voice assistants work by receiving spoken audio, converting that audio into a text string, and then processing that text string to produce a result. The result, which is also a text string, is then converted back into audio using a text-to-speech engine. While it is possible to convert speech into text with a local voice recognition model, running such a model requires significant computational resources. Smartphones and smart speakers do not usually have processors (or power supplies) capable of supporting voice processing locally, so they instead transmit a recording of the voice audio to a remote server for processing.
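The three stages described above can be sketched as a simple pipeline. This is only an illustration: the class name and the `fake_*` functions are hypothetical stand-ins, and a real assistant would replace them with actual speech recognition, request handling, and text-to-speech engines, typically running on a remote server.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoiceAssistantPipeline:
    """Chains the three stages: speech-to-text, request handling, text-to-speech."""
    speech_to_text: Callable[[bytes], str]
    handle_request: Callable[[str], str]
    text_to_speech: Callable[[str], bytes]

    def respond(self, audio: bytes) -> bytes:
        text = self.speech_to_text(audio)    # spoken audio -> text string
        result = self.handle_request(text)   # text string -> result text
        return self.text_to_speech(result)   # result text -> audio


# Hypothetical stand-ins so the sketch runs without any speech models.
def fake_speech_to_text(audio: bytes) -> str:
    # Pretend the "audio" bytes already contain the spoken words.
    return audio.decode("utf-8").lower()


def fake_handle_request(text: str) -> str:
    if "weather" in text:
        return "It is sunny."
    return "Sorry, I did not understand."


def fake_text_to_speech(text: str) -> bytes:
    # Pretend that encoding the text produces audio.
    return text.encode("utf-8")


pipeline = VoiceAssistantPipeline(
    fake_speech_to_text, fake_handle_request, fake_text_to_speech
)
reply = pipeline.respond(b"What is the weather today")
```

In a cloud-based assistant, everything inside `respond` would run on the provider's server, which is why the raw audio has to leave the device.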

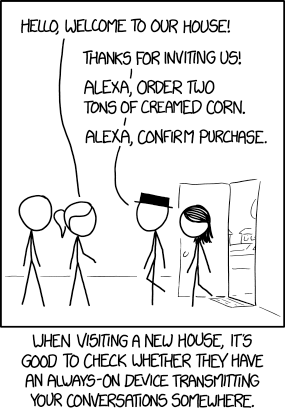

As illustrated in Figure 1, smart speakers for voice assistance have become common in homes. Examples include the Amazon Echo[2] and Google Home[3] families, which encompass dedicated smart speakers with built-in microphones, smartphone apps that use the phone’s microphone, microphone-equipped household appliances, and even thermostats and similar built-in equipment. The companies marketing these devices are actively encouraging people to place microphones throughout their homes.

Surveillance

A key issue with voice assistance technology is that it has to be kept in an always-listening mode in order to be effective. The microphones in these devices are therefore always active. Newer-generation devices at least perform some processing locally to detect a special word or phrase, which then triggers the device to transmit the audio recording to a remote server for processing the voice request. In theory, using local processing to activate the assistant mitigates the threat of an always-listening microphone. In practice, however, the limited capabilities of the on-device processor result in a significant number of false positive detections, causing these devices to transmit unintentionally recorded audio to the remote server about once an hour on average.[4]
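The wake-word gating described above, and the way a false positive leaks audio, can be illustrated with a small simulation. This is a sketch under stated assumptions: `WakeWordGate`, the confidence scores, and the 0.60 threshold are all hypothetical, and real devices use vendor-specific on-device detectors rather than hand-assigned scores.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class WakeWordGate:
    """Uploads a clip only when the local wake-word score meets the threshold."""
    threshold: float
    uploaded: List[str] = field(default_factory=list)

    def on_audio(self, clip: str, wake_score: float) -> bool:
        if wake_score >= self.threshold:
            # Stand-in for transmitting the recording to the remote server.
            self.uploaded.append(clip)
            return True
        return False


# Hypothetical clips with made-up detector scores between 0 and 1.
heard = [
    ("assistant, what time is it", 0.95),              # intentional activation
    ("private dinner conversation", 0.10),             # correctly ignored
    ("a TV line that resembles the wake word", 0.62),  # false positive
]

gate = WakeWordGate(threshold=0.60)
for clip, score in heard:
    gate.on_audio(clip, score)
```

After the loop, `gate.uploaded` holds both the intentional request and the television clip. The weaker the on-device detector, the more often unrelated audio crosses the threshold and leaves the home.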

Intentional interactions with the voice assistant are mined for data and used to target advertising in the same way as Internet search history or television/game console interactions. One could argue that this outcome is expected, since the user is knowingly sending a voice recording to the assistance service. Unintentional activations represent a more significant threat to privacy, since the user isn’t aware that the content of their speech is being recorded and transmitted. Nothing stops companies from collecting data from these unintentional activations.

Whenever a voice assistant is activated, whether intentionally or unintentionally, the service provider handling voice recognition may retain a recording of the original audio for an indefinite amount of time. Law enforcement can obtain these recordings through various mechanisms, likely requiring only a subpoena. Some of the companies operating these services might initially push back and demand a court order. However, prior cases demonstrate that the court is likely to grant the order.[5] Contrary to popular belief, the police do not require a warrant to obtain these voice recordings, since the Fourth Amendment is not implicated. A user who willfully installs a microphone that is capable of transmitting audio to a private company agrees to share their audio data with that company. Data voluntarily shared with a company becomes a business record and is therefore no longer protected against unreasonable search or seizure.

Mitigating the Threat

The best way to mitigate the threat of a voice assistant is not to use one at all. Much greater privacy can be obtained by using a properly configured Web browser to look up the same information or perform the same tasks as a voice assistant. For individuals with disabilities, a self-hosted solution like Willow[6] would offer greater privacy than a cloud-based solution. That said, for some populations (people with disabilities, senior citizens, and others), the benefits of a commercial voice assistant might outweigh the privacy implications.

Notes and References

[2] Amazon Echo (device search results). Amazon.

[3] A smarter home starts with Google Home. Google.

[4] Allen St. John. “Yes, Your Smart Speaker Is Listening When It Shouldn’t.” Consumer Reports. July 9, 2020.

[5] Zack Whittaker. “Judge orders Amazon to turn over Echo recordings in double murder case.” TechCrunch. November 14, 2018.